Using a Python Notebook Loop through a data frame and write once to a Lakehouse Table

In this blog post I am going to explain how to loop through a data frame to query data and write once to a Lakehouse table.

The example I will use is to loop through a list of dates which I get from my date table, then query an API, append to an existing data frame and finally write once to a Lakehouse table.

Query to get a data frame of dates

In my working example I want to get a list of dates by using a query and loading it into a data frame.

Below is the code with an explanation where I am querying a list of dates for which I want to load the weather API data.

NOTE: On lines 14 – 16 is where I have my SQL query to get a list of the dates.

Validating the Query

I then validate the query to make sure that the data is returned.

Changing from Unix DateTime to Date

Because I am using DuckDB the data frame returned as shown above is in the Unix DateTime format. In the code below I am then converting it to a Date format which I can then use to loop through and pass the valid Date values.

Looping through the dates and appending to a data frame

Next, I am going to loop through the dates, query the Weather API and append it to a final data frame.

I am going to split the single piece of code into a few snippets to make it easier to understand.

- This is where I am creating or initializing an empty list which I will use to collect the output from the data frame.

- This is where I am creating the loop for each row in my data frame called “df_Weather_Date”

- I am creating a variable which I can then use in the Weather API.

- NOTE: You will see that I am converting it to a string value to get it to be passed through to the API.

- NOTE II: When looking for the values in a row, it will be in the format of row.ColumnName (Make sure that your column names do not have any spaces).

- Finally, this is where I am using my variable in step 3 to convert it to be passed through to the weather API.

The code below is where I am running the Weather API

The final code snippet below is where I am appending the empty list to a date frame and combing it to a single data frame to be used later to write to the Lakehouse table.

- This is where I am getting the Weather API output data frame called “df_weather” and appending it to my list I created earlier called “all_weather_dates_dfs”

- The final step is where I am then combining the list into a new data frame called “combined_df_weather_Detail”

Once this is run, I can see the output as part of the process.

Changing the Date and Time formats

As done in previous blog posts I am changing the date and time formats so that it can be successfully written to the Lakehouse tables.

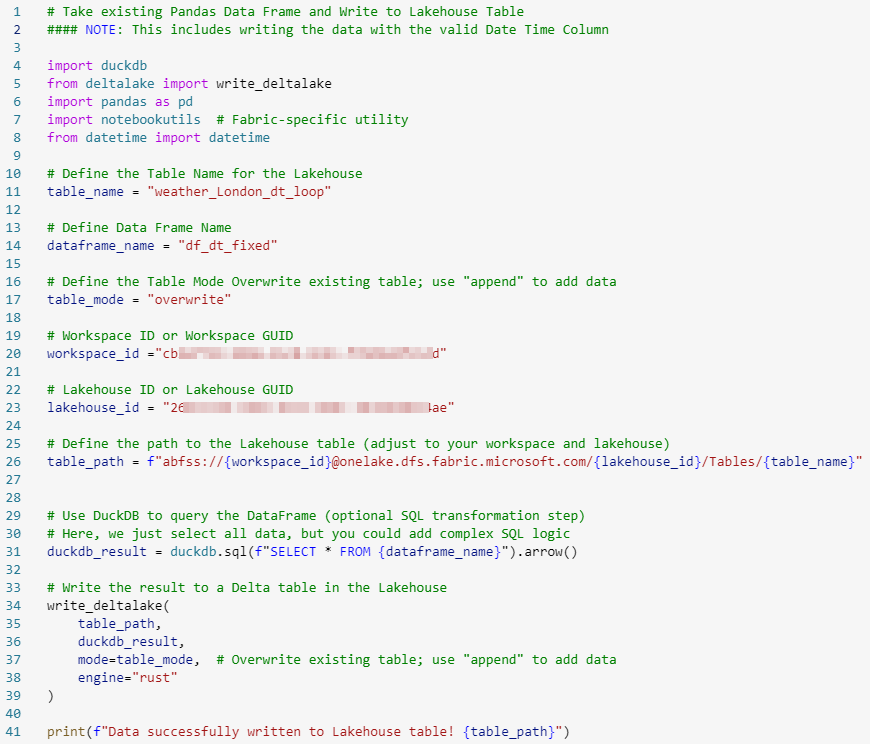

Writing once to the Lakehouse table

The final step is where I am taking my data frame and writing it once to the Lakehouse table.

NOTE: There has been a change to the version of Delta being used and it is highly recommended when writing to the Lakehouse table you ALWAYS use the “abfss” path to ensure that it can write successfully.

You will see my table path has been changed in line 26 in the code snippet shown below.

I can then validate that I can see the data in my Lakehouse table as shown below.

Summary

In this blog post I have shown you how to loop through a list of dates, combine the output from the Weather API and write it once to a Lakehouse table.

In the next blog post I will show you how to do an Insert & Update or Upsert into a Fact Table using DuckDB in a Python Notebook

You can find a link to the notebook I used above here: Fabric/Blog – Python – DuckDB – Looping and write once.ipynb at main · GilbertQue/Fabric

Thanks for reading, any comments or suggestions please let me know!

[…] Gilbert Quevauvilliers continues a series on Python notebooks and DuckDB: […]